a blog about unixy stuff and so on

2011/11/27

Correction regarding HTTP headers

I give talks on the subject of SSL, and sometimes errors do occur. In one of them I talk about a header called "cache-type: public" to enable caching in NSS-based browsers. This is incorrect: the correct header name is "Cache-Control: public". Sorry for the inconvenience.

2011/06/09

More SSL/TLS/HTTPS benchmarks

In an attempt to dispel the myths about HTTPS and performance, I recently gave a talk on the subject at Optimera Stockholm. This blog post describes how the benchmarks were done, which hardware was used, and some details about the network setup.

Test setup

The test was conducted between a MacBook Pro with a Core 2 Duo CPU running at 2.4 GHz (client) and a quad-core Intel machine running FreeBSD 8.2 (server). The network was a switched 100 Mbit network.

dmesg | grep -i cpu

CPU: Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz (2394.01-MHz K8-class CPU)

FreeBSD/SMP: Multiprocessor System Detected: 4 CPUs

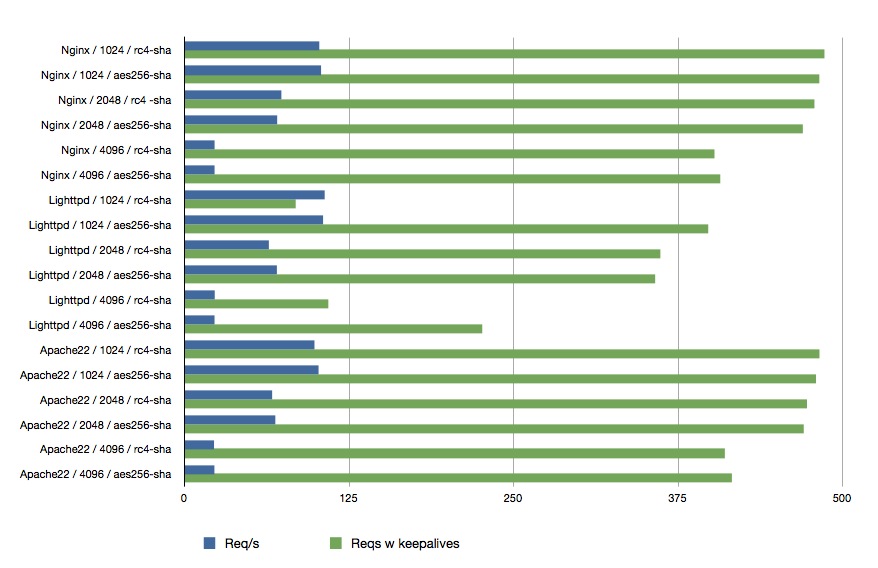

To perform the test, romab.com's index.html was fetched 10,000 times, both concurrently and in sequence, with and without keep-alives. Three web servers were tested: apache22, lighttpd 1.4.27 and nginx 1.0.2. Each server was tested with multiple settings: two ciphers were used, AES256 and RC4, and public keys of 1024, 2048 and 4096 bits were used to see how the key length affects performance.
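The test matrix above can be sketched as a small generator of ab runs. This is a hypothetical helper, not the script that was actually used: the cipher and the key length are server-side settings (configured in each web server between runs), so only the keep-alive switch changes the ab command line. The server labels and the target URL are assumptions for illustration.

```python
from itertools import product

# Dimensions of the benchmark matrix, as described above.
SERVERS = ["apache22", "lighttpd-1.4.27", "nginx-1.0.2"]
CIPHERS = ["AES256-SHA", "RC4-SHA"]   # set in the server's SSL configuration
KEY_BITS = [1024, 2048, 4096]         # RSA key length of the server certificate
KEEPALIVE = [False, True]


def ab_command(url, requests=10000, concurrency=1, keepalive=False):
    """Build the argument list for one ApacheBench run."""
    cmd = ["ab", "-n", str(requests), "-c", str(concurrency)]
    if keepalive:
        cmd.append("-k")  # ab's switch for HTTP keep-alive
    cmd.append(url)
    return cmd


def benchmark_matrix(url, concurrency=1):
    """Yield (server, cipher, key_bits, keepalive, command) for every combination."""
    for server, cipher, bits, ka in product(SERVERS, CIPHERS, KEY_BITS, KEEPALIVE):
        # Only keep-alive changes the ab command; server, cipher and key length
        # have to be switched on the server side before the run.
        yield server, cipher, bits, ka, ab_command(url, concurrency=concurrency, keepalive=ka)


runs = list(benchmark_matrix("https://nsa.research.romab.com/index.html"))
print(len(runs), "runs per concurrency level")
for server, cipher, bits, ka, cmd in runs[:2]:
    print(server, cipher, bits, ka, " ".join(cmd))
```

Three servers, two ciphers, three key lengths and keep-alive on/off give 36 runs for each concurrency level, which is why the matrix is worth scripting rather than typing by hand.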

The first test used sequential connections from a single client. Note that this is not a realistic workload, as a high-load website will most likely have hundreds of connections in parallel. Regardless, it gives valuable insight into how well the servers perform.

Now, that is interesting. All web servers gain a massive performance increase when keep-alives are enabled. We can also see that the cipher doesn't impact performance much, but the length of the public key sure does! Lighttpd performed really badly here; I actually redid the benchmarks, but it still does not perform well. This might be due to my lack of knowledge of how to configure lighttpd, a bug in lighttpd with SSL on FreeBSD, or simply that lighttpd does not perform very well when dealing with HTTPS.

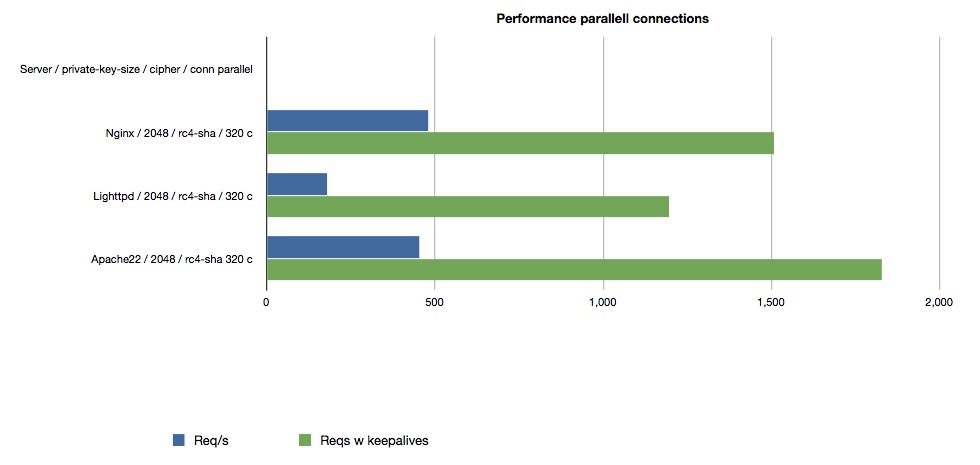

To get a realistic workload we need to add a massive number of clients in parallel, and this is where we start seeing differences between the servers: Apache doesn't allow you to set MaxClients above 256, and lighttpd stops giving reliable responses after 400 clients. On the other hand, nginx just keeps on going. I used up to 800 parallel clients against nginx, and after that I stopped benchmarking. The picture below illustrates 320 parallel connections, the highest I could go before Apache threw in the towel.

The software used for the actual tests was ApacheBench, or "ab" as it is called on most Unix systems. All graphs on this page were created from data that ab produces. Here is a sample of what the raw data looks like:

This is ApacheBench, Version 2.3 <$Revision: 655654 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking nsa.research.romab.com (be patient)

Server Software: nginx/1.0.2

Server Hostname: nsa.research.romab.com

Server Port: 443

SSL/TLS Protocol: TLSv1/SSLv3,RC4-SHA,2048,128

Document Path: /index.html

Document Length: 5496 bytes

Concurrency Level: 800

Time taken for tests: 11.310 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Keep-Alive requests: 10000

Total transferred: 57131424 bytes

HTML transferred: 54970992 bytes

Requests per second: 884.17 [#/sec] (mean)

Time per request: 904.801 [ms] (mean)

Time per request: 1.131 [ms] (mean, across all concurrent requests)

Transfer rate: 4933.01 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 353 1238.8 0 6437

Processing: 196 534 540.1 390 3240

Waiting: 196 534 540.1 390 3240

Total: 196 887 1676.8 390 6972

Percentage of the requests served within a certain time (ms)

50% 390

66% 390

75% 391

80% 392

90% 395

95% 6674

98% 6924

99% 6949

100% 6972 (longest request)
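ab's summary lines are derived from a few raw counters, which makes output like the above easier to read. The sketch below recomputes them for this nginx run. The formulas mirror what ab reports: the mean time per request is concurrency times total time divided by requests, the "across all concurrent requests" figure is total time divided by requests, and the transfer rate is total bytes / 1024 / total time. The function name is only illustrative.

```python
def ab_summary(requests, concurrency, seconds, total_bytes):
    """Recompute ab's derived summary fields from its raw counters."""
    return {
        "requests_per_second": requests / seconds,
        # "Time per request (mean)": how long each of the concurrent clients waits
        "time_per_request_ms": concurrency * seconds * 1000 / requests,
        # "(mean, across all concurrent requests)": wall-clock time per request
        "time_per_request_all_ms": seconds * 1000 / requests,
        # "Transfer rate" in KB/s, based on "Total transferred"
        "transfer_rate_kb_s": total_bytes / 1024 / seconds,
    }


# Values from the nginx run above (800 concurrent clients, 10000 requests).
stats = ab_summary(requests=10000, concurrency=800, seconds=11.310, total_bytes=57131424)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

The two "Time per request" lines differ by exactly the concurrency factor (800), which is why a high mean per-client latency can coexist with a very low per-request cost for the server as a whole.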

2011/05/04

New PGP Key

Since my PGP key is about to expire, I've decided to roll it over. For the full key transition, see keyrollover-andreas.txt.asc, and get the key at https://www.romab.com/andreas.gpg.

2011/03/28

HTTPS Obsession

As wiretapping is getting more and more common (or, more correctly, as I am becoming less and less naive), I've started to appreciate the HTTPS Everywhere extension for Firefox more and more. This has resulted in two things: 1) I get annoyed by sites that do not support HTTPS, and 2) if I find a site that does support HTTPS, I write a rule for it, which I then try to get committed to mainline.

Of course, this has the added benefit of providing end-to-end security, which prevents Firesheep-style attacks and also ensures that the content received is the content requested. This of course requires that the CAs are behaving, but that is another story.
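An HTTPS Everywhere rule is a small XML ruleset: target hosts, plus rules whose "from" is a regular expression and whose "to" is a replacement using $1-style back-references. The sketch below is a minimal, hypothetical illustration of how such a rule rewrites a URL; it is not the extension's own code, and example.com stands in for a real site.

```python
import re
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# A ruleset in the HTTPS Everywhere XML format; example.com is a placeholder.
RULESET = r"""
<ruleset name="Example">
  <target host="example.com" />
  <target host="www.example.com" />
  <rule from="^http://(www\.)?example\.com/" to="https://$1example.com/" />
</ruleset>
"""


def load_ruleset(xml_text):
    """Return the target hosts and a list of (compiled regex, replacement) pairs."""
    root = ET.fromstring(xml_text.strip())
    hosts = {target.get("host") for target in root.findall("target")}
    rules = []
    for rule in root.findall("rule"):
        # Rules use JavaScript-style $1 back-references; Python's re wants \1.
        replacement = re.sub(r"\$(\d+)", r"\\\1", rule.get("to"))
        rules.append((re.compile(rule.get("from")), replacement))
    return hosts, rules


def rewrite(url, hosts, rules):
    """Apply the first matching rule to url, or return it unchanged."""
    if urlsplit(url).hostname not in hosts:
        return url
    for pattern, replacement in rules:
        if pattern.search(url):
            # An unmatched optional group such as (www\.)? becomes an empty string.
            return pattern.sub(replacement, url, count=1)
    return url


hosts, rules = load_ruleset(RULESET)
print(rewrite("http://www.example.com/index.html", hosts, rules))
```

The optional (www\.)? group lets one rule cover both the bare domain and the www host, which is a common pattern when writing rules for a site.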

Different rules apply.

HTTPS and HTTPS Everywhere are nice if you value privacy, and depending on your choices in life, this or this rule might apply to you. Or perhaps you like a little bit of everything and will enjoy both. :-)

2011/03/21

HTTPS and Performance

Many people state that performance is the main issue that prevents them from enabling HTTPS on their websites. Sure, the difference between a simple HTTP GET and a full TLS handshake followed by an HTTP GET is quite huge, especially since the handshake is performed for every new connection. Without keep-alives, this means that the TLS penalty has to be paid every time the user fetches a JavaScript file, a stylesheet, a picture, etc.

Using ApacheBench, we can quite easily determine how large the performance impact is by comparing TLS with non-TLS communication.

The test was done against ROMAB's web server from our office network. Here are some results:

minuteman:https-benchmarks andreas$ ab -n 10000 http://www.romab.com/index.html

This is ApacheBench, Version 2.3 <$Revision: 655654 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking www.romab.com (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: lighttpd/1.4.28

Server Hostname: www.romab.com

Server Port: 80

Document Path: /index.html

Document Length: 5569 bytes

Concurrency Level: 1

Time taken for tests: 79.515 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Total transferred: 58040000 bytes

HTML transferred: 55690000 bytes

Requests per second: 125.76 [#/sec] (mean)

Time per request: 7.951 [ms] (mean)

Time per request: 7.951 [ms] (mean, across all concurrent requests)

Transfer rate: 712.82 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 6 160.5 1 10989

Processing: 2 2 2.6 2 257

Waiting: 0 1 0.4 1 33

Total: 2 8 160.5 3 10991

Percentage of the requests served within a certain time (ms)

50% 3

66% 3

75% 3

80% 3

90% 4

95% 4

98% 4

99% 4

100% 10991 (longest request)

OK, without doing any deeper analysis, the important number here is requests per second (125/sec). Now let's do the same test with TLS:

minuteman:https-benchmarks andreas$ ab -n 10000 https://www.romab.com/index.html

This is ApacheBench, Version 2.3 <$Revision: 655654 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking www.romab.com (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: lighttpd/1.4.28

Server Hostname: www.romab.com

Server Port: 443

SSL/TLS Protocol: TLSv1/SSLv3,AES256-SHA,4096,256

Document Path: /index.html

Document Length: 5569 bytes

Concurrency Level: 1

Time taken for tests: 1476.578 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Total transferred: 58660000 bytes

HTML transferred: 55690000 bytes

Requests per second: 6.77 [#/sec] (mean)

Time per request: 147.658 [ms] (mean)

Time per request: 147.658 [ms] (mean, across all concurrent requests)

Transfer rate: 38.80 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 143 145 3.4 145 415

Processing: 2 2 1.5 2 134

Waiting: 0 1 1.4 1 133

Total: 145 147 3.8 147 417

Percentage of the requests served within a certain time (ms)

50% 147

66% 147

75% 147

80% 148

90% 148

95% 149

98% 155

99% 155

100% 417 (longest request)

OUCH! We went from 125 requests per second to 6.77 requests per second, and the time per request skyrocketed from about 8 ms to about 148 ms. The reason is that we are performing a new TLS handshake for every connection, which is costly.
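To put numbers on the handshake cost, the two runs can be compared directly. A minimal sketch that only uses figures from the ab output above (the dictionary layout and variable names are illustrative):

```python
# Figures from the two ab runs above (concurrency 1, 10000 requests each).
plain = {"rps": 125.76, "ms_per_req": 7.951, "connect_ms": 6}
tls = {"rps": 6.77, "ms_per_req": 147.658, "connect_ms": 145}

slowdown = plain["rps"] / tls["rps"]
extra_ms = tls["ms_per_req"] - plain["ms_per_req"]
extra_connect_ms = tls["connect_ms"] - plain["connect_ms"]

print(f"HTTPS without keep-alives is {slowdown:.1f}x slower")
print(f"each request costs about {extra_ms:.1f} ms more")
print(f"of which about {extra_connect_ms} ms is spent in the Connect phase")
```

Almost all of the extra time shows up in the Connect phase, which is where the TLS handshake happens, while the mean Processing time stays at about 2 ms in both runs.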

We can, however, get a significant performance improvement by enabling keep-alives and letting the client reuse the TLS connection that is already open.

minuteman:https-benchmarks andreas$ ab -k -n 10000 https://www.romab.com/index.html

This is ApacheBench, Version 2.3 <$Revision: 655654 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking www.romab.com (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: lighttpd/1.4.28

Server Hostname: www.romab.com

Server Port: 443

SSL/TLS Protocol: TLSv1/SSLv3,AES256-SHA,4096,256

Document Path: /index.html

Document Length: 5569 bytes

Concurrency Level: 1

Time taken for tests: 105.949 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Keep-Alive requests: 9412

Total transferred: 58707060 bytes

HTML transferred: 55690000 bytes

Requests per second: 94.39 [#/sec] (mean)

Time per request: 10.595 [ms] (mean)

Time per request: 10.595 [ms] (mean, across all concurrent requests)

Transfer rate: 541.12 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 9 34.4 0 447

Processing: 2 2 0.1 2 3

Waiting: 1 1 0.1 1 3

Total: 2 11 34.4 2 449

Percentage of the requests served within a certain time (ms)

50% 2

66% 2

75% 2

80% 2

90% 2

95% 146

98% 147

99% 147

100% 449 (longest request)
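These keep-alive numbers can be sanity-checked against the earlier runs. ab reports 9412 keep-alive requests, so roughly 588 requests had to open a new connection and pay for a full handshake again. Assuming each handshake costs about the 145 ms mean Connect time of the run without keep-alives, and each request spends about 2 ms in processing, a back-of-the-envelope estimate looks like this:

```python
requests = 10000
keepalive_requests = 9412   # "Keep-Alive requests" from the ab output
handshake_ms = 145          # assumed: mean Connect time of the TLS run without keep-alives
processing_ms = 2           # mean Processing time, about the same in every run

new_connections = requests - keepalive_requests
estimated_s = (new_connections * handshake_ms + requests * processing_ms) / 1000
share_with_handshake = new_connections / requests

print(f"{new_connections} new connections")
print(f"estimated total time: {estimated_s:.1f} s (ab measured 105.949 s)")
print(f"{share_with_handshake:.1%} of requests paid for a handshake")
```

The estimate lands close to the measured 105.9 seconds. And since about 5.9% of the requests include a handshake, it also explains why the 90th percentile is still 2 ms while the 95th percentile jumps to 146 ms.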

Quite the improvement! In reality, however, keep-alives are almost always enabled by default, so there is rarely any need to tune them. The big gains usually come from aggressive caching of JavaScript, images and stylesheets. Other types of static content, such as iframes that never change, can of course also be cached. This has the benefit of improving performance for content accessed over plain HTTP as well.

|  | HTTP | HTTPS | HTTPS with keep-alives |
|---|---|---|---|
| Requests per second | 125.76 | 6.77 | 94.39 |
| Time per request | 7.951 ms | 147.658 ms | 10.595 ms |
| Time taken for tests | 79.515 s | 1476.578 s | 105.949 s |
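As a sketch of the aggressive caching suggested above, here is a hypothetical helper that picks a Cache-Control value by file type. The extension list and the one-year max-age are assumptions, not settings from these benchmarks, and in practice the same policy is normally configured in the web server itself.

```python
from pathlib import PurePosixPath

# Hypothetical policy: long-lived caching for static assets, revalidation for pages.
ONE_YEAR = 365 * 24 * 3600
STATIC_EXTENSIONS = {".js", ".css", ".png", ".jpg", ".jpeg", ".gif", ".ico"}


def cache_control_for(path):
    """Return a Cache-Control header value for the requested path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # "public" marks the response as cacheable by any cache.
        return f"public, max-age={ONE_YEAR}"
    # Pages that may change: the client must revalidate before reusing them.
    return "no-cache"


for p in ["/index.html", "/js/app.js", "/img/logo.PNG"]:
    print(p, "->", cache_control_for(p))
```

Long max-age values work best when static files get a new name whenever their content changes, so that clients never keep a stale copy.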

IronAdium 0.4.1 released

IronAdium has been in great need of some love, and while some things have been fixed in current, a release is way overdue. Today IronAdium 0.4.1 was released, and it has some new goodies, mostly in the configuration and stability department.

The "big" feature is that it is now possible to send and receive files through IronAdium, something that has prevented a few users from using secure IM. Not anymore. For all the details, install instructions and documentation, check out IronAdium here.

2010/11/24

IronFox now backported to 10.5, and some info about Firefox 4

OK, I haven't blogged in a while, and haven't posted any updates on IronFox either. The good news is that I have been busy. The wrapper has been completely rewritten, which will reduce startup time (probably marginally), and there are now graphical error messages that pop up if IronFox is unhappy about something. Hopefully you won't notice those :-)

But the biggest news is probably that IronFox has been backported to run on Mac OS X 10.5. Now we need testers, lots of them. The software will be released this week. This and the possibility to add a list of directories that should be allowed for reading and writing are probably the only user-visible changes.

Apart from that, IronFox has been tested internally with Firefox 4, and if nothing dramatic happens, IronFox with Firefox 4 support will be released the same day as Firefox 4. IronFox needs to be updated because of Mozilla's new plugin architecture: as each plugin runs in its own separate plugin wrapper process, IronFox needs to allow Firefox to spawn that process. The good news for us security geeks is that we can constrain the plugins in their own sandbox. This means that even if you allow Firefox to write files to your desktop, you don't necessarily need to give Flash those rights. Hopefully this will make it much harder to own the browser, as the attack vector shrinks from the plugin itself to the plugin's IPC with the browser :-)

2010/11/04

IronFox 0.8.2 released, among other things.

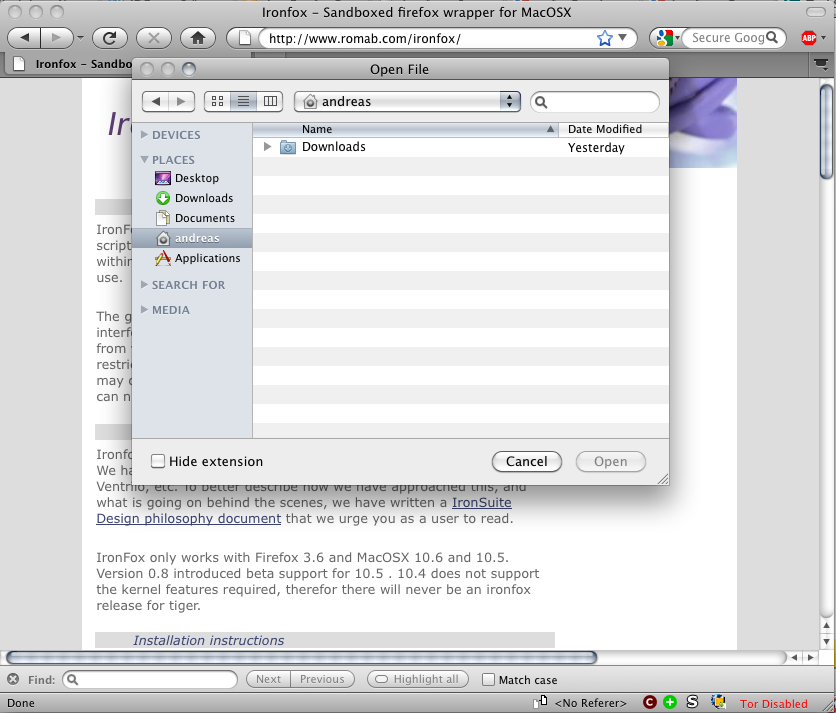

An interesting problem when running sandboxed application wrappers is that you might have a hard time confirming whether your application is sandboxed or not. The new policy (or, more correctly, the updated policy) forbids Firefox from reading any information from your home directory, and only directories allowed for reading and writing (such as the Downloads directory) are displayed. Should you ever be in doubt, simply press Cmd+O: if no files are shown in your home directory, you can expect the browser to be sandboxed. (Of course, you can always read /var/log/system.log if you are in debug mode :-)

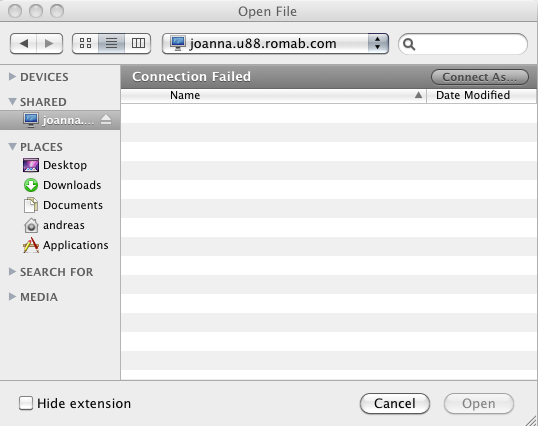

Another interesting property of Mac OS X is that network shares are displayed regardless of whether you allow them to be read or not. This of course does not mean that Firefox can access the share, as shown here:

But what is happening behind the scenes? Let's find out what is actually being denied. Note that this list has been shortened a bit to make it easier to read. If you want all the gory details, they are in /var/log/system.log if you run IronFox in debug mode. Note that most of this activity isn't in Firefox itself, but in the APIs Firefox uses to access these resources.

sandboxd[13803]: firefox-bin(20023) deny mach-lookup com.apple.FSEvents

sandboxd[13803]: firefox-bin(20023) deny file-read-data /Users/andreas/Library/Preferences/com.apple.finder.plist

So the sandbox denies the browser access to the Mach port com.apple.FSEvents and to the Finder preferences. Judging by the names, we can guess that this is Finder-related and assume that Finder wants to give us info about the SMB share.

firefox-bin[20023]: __SCPreferencesCreate open() failed: Operation not permitted

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: SCPreferencesCreate failed: Failed!

sandboxd[13803]: firefox-bin(20023) deny file-read-data /Library/Preferences/SystemConfiguration/com.apple.smb.server.plist

firefox-bin[20023]: __SCPreferencesCreate open() failed: Operation not permitted

sandboxd[13803]: firefox-bin(20023) deny file-read-data /Library/Preferences/SystemConfiguration/com.apple.smb.server.plist

sandboxd[13803]: firefox-bin(20023) deny file-read-data /Library/Filesystems/NetFSPlugins

Firefox complains that SCPreferencesCreate fails due to lack of permission. No wonder: we are not allowed to read anything about SMB/CIFS.

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: Failed to get user access for mount /Volumes/andreas

sandboxd[13803]: firefox-bin(20023) deny system-fsctl

sandboxd[13803]: firefox-bin(20023) deny file-read-data /private/var/db/smb.conf

Interesting: Firefox is not allowed to access this user mount, and the sandbox denies it system-fsctl, the operation covering fsctl(2), a system call for controlling mounted file systems. We are also denied reading the Samba configuration file.

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: 1025 failures to open smb device: syserr = No such file or directory

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: netfs_GetServerInfo returned 2

Firefox complains about the lack of an SMB device. News to me that Apple had one of those.

sandboxd[13803]: firefox-bin(20023) deny network-inbound 0.0.0.0:52129

sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0

Firefox listening on my network? No. Here we also find the device that Firefox was complaining about not being able to open.

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: Failed to get user access for mount /Volumes/andreas

sandboxd[13803]: firefox-bin(20023) deny file-read-data /Library/Filesystems/NetFSPlugins

sandboxd[13803]: firefox-bin(20023) deny system-fsctl

sandboxd[13803]: firefox-bin(20023) deny file-read-data /private/var/db/smb.conf

sandboxd[13803]: firefox-bin(20023) deny network-inbound 0.0.0.0:59283

sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0

Hmmm. It didn't work the first time, but the API makes several attempts before it gives up.

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: 1025 failures to open smb device: syserr = No such file or directory

sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: 1025 failures to open smb device: syserr = No such file or directory

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: netfs_GetServerInfo returned 2

sandboxd[13803]: firefox-bin(20023) deny mach-lookup com.apple.netauth.useragent

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: SharePointBrowser::handleOpenCallBack returned -6600

Wow. SharePointBrowser. More news for me. Firefox concludes that the return code -6600 is not normal. The sandbox denies IPC access to the Mach port com.apple.netauth.useragent, which we can guess would have handled authorizing us to the share.

sandboxd[13803]: firefox-bin(20023) deny mach-lookup com.apple.netauth.useragent

sandboxd[13803]: firefox-bin(20023) deny network-inbound 10.100.100.88:55691

sandboxd[13803]: firefox-bin(20023) deny network-inbound 10.100.100.88:55690

firefox-bin[20023]: __SCPreferencesCreate open() failed: Operation not permitted

/Applications/IronFox.app/Contents/Resources/IronFox.app/Contents/MacOS/firefox-bin[20023]: SCPreferencesCreate failed: Failed!

sandboxd[13831]: firefox-bin(20023) deny mach-lookup com.apple.FSEvents

Another access attempt to the com.apple.netauth.useragent IPC port is denied, as is opening listening sockets on my IP address on ports 55691 and 55690. SCPreferencesCreate fails, and we are still not allowed to access FSEvents. So there we go: a quick summary of what happens when you try to browse a network share in OS X when you are not allowed to.
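When the debug log gets long, it helps to group the sandboxd denials by operation. A minimal sketch, assuming the line format seen in the excerpts (sandboxd[PID]: process(PID) deny OPERATION, optionally followed by a target without spaces); the sample lines are copied from the excerpts, and the function name is hypothetical.

```python
import re
from collections import defaultdict

# Matches lines such as
#   sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0
# The target is optional because some operations (system-fsctl) log none.
DENY_RE = re.compile(r"sandboxd\[\d+\]: \S+\(\d+\) deny (?P<op>\S+)(?: (?P<target>\S+))?")


def summarize_denials(lines):
    """Map each denied operation to the sorted, de-duplicated targets it was denied for."""
    denials = defaultdict(set)
    for line in lines:
        match = DENY_RE.search(line)
        if match:
            denials[match.group("op")].add(match.group("target") or "(no target)")
    return {op: sorted(targets) for op, targets in denials.items()}


SAMPLE = """\
sandboxd[13803]: firefox-bin(20023) deny mach-lookup com.apple.FSEvents
sandboxd[13803]: firefox-bin(20023) deny file-read-data /private/var/db/smb.conf
sandboxd[13803]: firefox-bin(20023) deny system-fsctl
sandboxd[13803]: firefox-bin(20023) deny network-inbound 0.0.0.0:52129
sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0
sandboxd[13803]: firefox-bin(20023) deny file-read-data /dev/nsmb0
"""

for op, targets in summarize_denials(SAMPLE.splitlines()).items():
    print(f"{op}: {', '.join(targets)}")
```

Because the regex uses search rather than match, it also works on full system.log lines that carry a timestamp and host name in front of "sandboxd".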

2010/04/21

More IronFox modules.

IronFox now supports a few new modules, which allow Kerberos/SPNEGO authentication and the proprietary 1passwd plugin. Nexus Personal support is also in the works, and will most likely be included in the 0.4 release.

Another idea is to add a GUI for configuration instead of allowing all functionality. This would most likely include some sort of menu for configuring which plugins are allowed. IronFox now supports (besides basic Firefox functionality) Java, Flash, Kerberos and 1passwd. If I can find any site besides apple.com that uses QuickTime, it will most likely be added as well.

2010/04/06

IronFox 0.3 released

For those interested in playing around with a sandboxed Firefox, IronFox is now available. It's only available for OS X 10.6. Note that the app requires Firefox 3.6 to be installed in /Applications to work.

2009/07/10

Using Active Directory as an LDAP server for Unix

Using AD as an LDAP server is quite useful, as you do not need to manage users on every host, nor do you need to configure printers, groups, etc. in more than one place. It also allows central authentication for all users, i.e. they have the same password in Unix and Windows. It is recommended that you use Kerberos instead of LDAP for authentication, however. Sometimes there are reasons to just go with LDAP and not involve Kerberos at all. This is usually the case when you are working in a dysfunctional organization where it is impossible for the Unix admins to get help from the Windows admins. Kerberos requires some fiddling with keytabs. More on that in a later post.

To get started, you first need to install Services for Unix (SFU) on one AD server. The installation forces a reboot, so keep that in mind when selecting the DC. The installation defaults are OK, as they do not start any services on the AD server except for the NFS client, which can be disabled after installation. You can find SFU as a free download from Microsoft here:

Once SFU is installed and the server has rebooted, you will have a new tab called UNIX Attributes in Active Directory Users and Computers. Now we can start adding the users that are needed for Solaris. The first step is to create a proxy user that Solaris will use to make LDAP queries. Create a user that is a member of the "Domain Guests" security group. It does not need any other memberships.

Disable password expiration and set the option that the user cannot change the password. Be sure to remember the password. The boring Windows stuff is now done, and we can move on to configuring Solaris. The Solaris version we are targeting is Solaris 10. Note that Solaris 10 before update 6 is quite buggy when it comes to LDAP, so you might want to patch it up a bit before you start playing around with LDAP.

First, edit /etc/nsswitch.ldap. This file will later overwrite nsswitch.conf when you run the ldapclient command. A good idea is to only use LDAP for passwd and group entries. If you use the defaults, DNS will stop working, and a lot of things will be slower than necessary.

Here is an example of an nsswitch.ldap file that is basically a standard nsswitch.conf copied to nsswitch.ldap and then tweaked a bit:

bash-3.00# grep ldap /etc/nsswitch.ldap

passwd: files ldap

group: files ldap

printers: user files ldap
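Because ldapclient copies nsswitch.ldap over nsswitch.conf, it can pay to sanity-check the file first. A minimal, hypothetical checker (not part of Solaris) that parses the "database: sources" lines, skips [STATUS=action] criteria, and flags the two problems mentioned above: a hosts entry that no longer consults DNS, and databases other than passwd, group and printers that have been pointed at LDAP. The allowed set is an assumption based on the advice in this post.

```python
import re

LDAP_OK = {"passwd", "group", "printers"}  # assumption: the only databases we want in LDAP


def parse_nsswitch(text):
    """Return {database: [sources]}, ignoring comments and [STATUS=action] criteria."""
    entries = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        db, sources = line.split(":", 1)
        # Drop criteria such as [NOTFOUND=return] before splitting into sources.
        sources = re.sub(r"\[[^\]]*\]", " ", sources)
        entries[db.strip()] = sources.split()
    return entries


def check_nsswitch(text):
    """Return a list of human-readable warnings."""
    entries = parse_nsswitch(text)
    warnings = []
    if "dns" not in entries.get("hosts", []):
        warnings.append("hosts does not use dns")
    for db, sources in sorted(entries.items()):
        if "ldap" in sources and db not in LDAP_OK:
            warnings.append(f"{db} is looked up in ldap")
    return warnings


GOOD = """\
passwd:     files ldap
group:      files ldap
hosts:      files dns
printers:   user files ldap
"""
BAD = """\
passwd:     files ldap
hosts:      ldap [NOTFOUND=return] files
"""
print(check_nsswitch(GOOD))
print(check_nsswitch(BAD))
```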

The next step is to initialize the LDAP client. This is a basic configuration that will probably work if you replace the romab domains with your own, but note that we are using simple binds here, which IS A BAD IDEA, as passwords are sent in plain text to the LDAP server.

ldapclient manual \
-a credentialLevel=proxy \
-a authenticationMethod=simple \
-a proxyDN=cn=proxyusr,cn=Users,dc=research,dc=romab,dc=com \
-a proxyPassword=yourpassword \
-a defaultSearchBase=dc=research,dc=romab,dc=com \
-a domainName=research.romab.com \
-a defaultServerList=10.200.2.3 \
-a attributeMap=group:userpassword=msSFU30Password \
-a attributeMap=group:memberuid=msSFU30MemberUid \
-a attributeMap=group:gidnumber=msSFU30GidNumber \
-a attributeMap=passwd:gecos=msSFU30Gecos \
-a attributeMap=passwd:gidnumber=msSFU30GidNumber \
-a attributeMap=passwd:uidnumber=msSFU30UidNumber \
-a attributeMap=passwd:uid=sAMAccountName \
-a attributeMap=passwd:homedirectory=msSFU30HomeDirectory \
-a attributeMap=passwd:loginshell=msSFU30LoginShell \
-a attributeMap=shadow:shadowflag=msSFU30ShadowFlag \
-a attributeMap=shadow:userpassword=msSFU30Password \
-a attributeMap=shadow:uid=sAMAccountName \
-a objectClassMap=group:posixGroup=group \
-a objectClassMap=passwd:posixAccount=user \
-a objectClassMap=shadow:shadowAccount=user \
-a serviceSearchDescriptor=passwd:cn=Users,dc=research,dc=romab,dc=com?sub \
-a serviceSearchDescriptor=group:cn=groups,dc=research,dc=romab,dc=com?sub

Does it work? A good test is to run getent passwd username and see if the user appears correctly.

bash-3.00# grep andreas /etc/passwd

bash-3.00# getent passwd andreas

andreas:x:10000:2500::/export/home/andreas:/bin/sh
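To make the attributeMap options less magical: they tell the Solaris LDAP client which AD (SFU) attribute to read for each field of a passwd entry. The sketch below is a hypothetical illustration, not how the Solaris client is implemented. It applies the passwd mappings from the ldapclient command to a made-up AD entry, whose values are assumptions chosen to match the getent output above, and builds the same kind of line that getent prints.

```python
# passwd mappings from the ldapclient command above:
# RFC 2307 attribute name -> AD/SFU attribute that holds the value.
PASSWD_MAP = {
    "uid": "sAMAccountName",
    "uidnumber": "msSFU30UidNumber",
    "gidnumber": "msSFU30GidNumber",
    "gecos": "msSFU30Gecos",
    "homedirectory": "msSFU30HomeDirectory",
    "loginshell": "msSFU30LoginShell",
}


def passwd_line(ad_entry):
    """Build a passwd-style line from an AD entry via the attribute map."""
    def get(field):
        # Missing attributes become empty fields, like the empty gecos above.
        return str(ad_entry.get(PASSWD_MAP[field], ""))

    # The password field is shown as "x"; authentication goes through PAM.
    return ":".join([get("uid"), "x", get("uidnumber"), get("gidnumber"),
                     get("gecos"), get("homedirectory"), get("loginshell")])


# Hypothetical AD entry with the SFU UNIX Attributes filled in.
entry = {
    "sAMAccountName": "andreas",
    "msSFU30UidNumber": 10000,
    "msSFU30GidNumber": 2500,
    "msSFU30HomeDirectory": "/export/home/andreas",
    "msSFU30LoginShell": "/bin/sh",
}
print(passwd_line(entry))  # andreas:x:10000:2500::/export/home/andreas:/bin/sh
```

If getent shows an empty uid or gid number for a user, the corresponding SFU attribute is most likely not filled in on that user's UNIX Attributes tab.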

If that went well, you only need to configure PAM to be able to log in as users configured in your Active Directory. Here is a sample PAM configuration that you might want to use:

/etc/pam.conf:

other auth required pam_unix_auth.so.1 server_policy

other auth required pam_ldap.so.1

This enables PAM authentication for SSH. If you need it for login, simply change the login directives in the same way:

/etc/pam.conf:

login auth required pam_unix_auth.so.1 server_policy

login auth required pam_ldap.so.1

This configuration has several security issues. Passwords are sent in clear text from the client (Solaris 10) to the server (AD), so all users' passwords will be exposed. This can, however, be fixed by using tls:simple instead of a regular simple bind. It also has the problem that the data returned by the server can be manipulated in transit, which means that if we use the Solaris RBAC feature with its data stored in LDAP, additional roles may be added, or the UID number may be manipulated. Using TLS is a must if you intend to use this.

Also note that the uid attribute is not indexed by the default SFU installation. For performance reasons, you might want to add an index via the Active Directory Schema snap-in. If it is not visible as an available snap-in, start cmd.exe and type "regsvr32 schmmgmt.dll", and it should become visible in the MMC.

There are several security considerations with this configuration. Besides not using TLS, the most notable problem is that pam_ldap.so.1 in Solaris ONLY handles authentication: there is no way to group users so that only certain users may log in to server X while others are allowed on server Y. A better approach is to use PADL's pam_ldap, which offers a lot more options. The best way is probably to combine the Solaris nss_ldap, pam_ldap from PADL, and Kerberos for authentication. That gives you centralized authentication and authorization, and no passwords are sent over the wire except when the proxy user binds and when a user uses su. The su problem can be mitigated with Kerberos as well, but a compromised server will expose the principal's password if su is used.

2002/09/09

Using Active Directory as a Unix KDC - Quick start

The use of Kerberos in Unix environments is often neglected due to the imagined complexity of configuring such an environment. Below is a step-by-step guide on how to integrate Unix with Kerberos via Active Directory to provide users with a secure single sign-on experience.

While most guides (some of them good, others lacking detail) try to explain too much about the protocol, this guide tries to be quick and easy to use, avoiding too many details and instead focusing on the actions that need to be done.

The first thing that needs to be done is to create a shared secret between the Unix host and the KDC/AD. This is done with the ktpass.exe utility, which is bundled with Windows Server 2003 in the Support Tools directory. Note that ktpass.exe is updated regularly, and using a version older than the one bundled with Windows Server 2003 R2 is not recommended, as it lacks certain options.

Create a service account

In Active Directory Users and Computers, create a new account and name it something like "host-$hostname". Make sure that the password does not expire and that the user cannot change its password. By the way, it is a good idea to apply a domain policy to this user so that it cannot be used for logins.

Extract a keytab by using ktpass.exe

Copy the keytab securely to the destination host and save it as /etc/krb5.keytab (or /etc/krb5/krb5.keytab), depending on where the system's krb5.conf specifies the keytab.

Configure krb5.conf, enable GSSAPI in sshd, restart it and try to log in. Easy as pie!
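For the krb5.conf step, a minimal file needs the default realm, the KDC (a domain controller) and the mapping from DNS domain to realm. The sketch below renders such a file. The realm RESEARCH.ROMAB.COM and the DC address 10.200.2.3 are assumptions borrowed from the LDAP post, so substitute your own; an AD domain's Kerberos realm is its DNS domain name in upper case.

```python
def render_krb5_conf(domain, kdcs):
    """Render a minimal krb5.conf; the realm is the AD domain name in upper case."""
    realm = domain.upper()
    kdc_lines = "\n".join(f"        kdc = {kdc}" for kdc in kdcs)
    return (
        "[libdefaults]\n"
        f"    default_realm = {realm}\n"
        "\n"
        "[realms]\n"
        f"    {realm} = {{\n"
        f"{kdc_lines}\n"
        "    }\n"
        "\n"
        "[domain_realm]\n"
        f"    .{domain} = {realm}\n"
        f"    {domain} = {realm}\n"
    )


# Hypothetical values: replace with your AD domain and domain controllers.
print(render_krb5_conf("research.romab.com", ["10.200.2.3"]))
```

With OpenSSH, the "enable GSSAPI in sshd" step corresponds to setting GSSAPIAuthentication yes in sshd_config.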